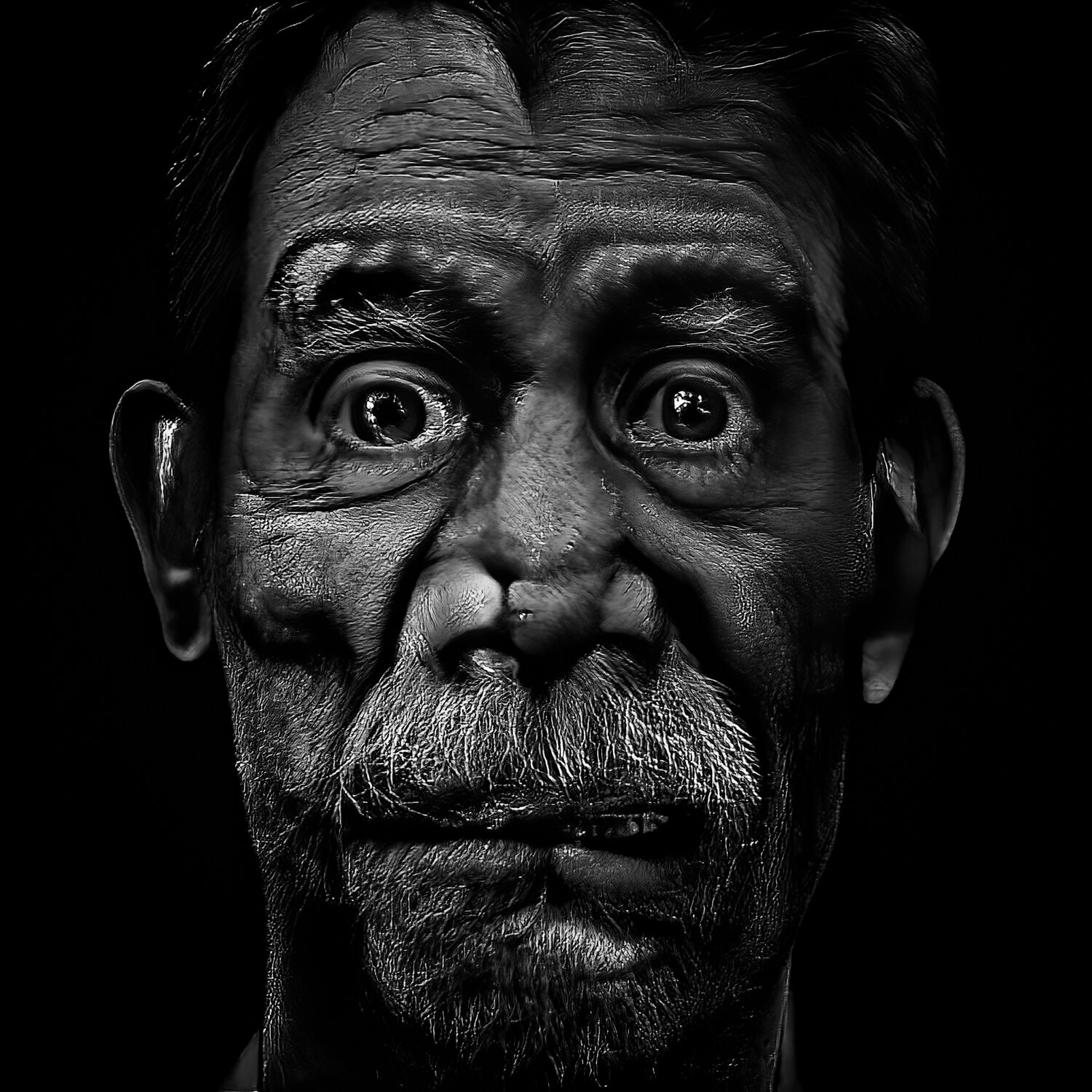

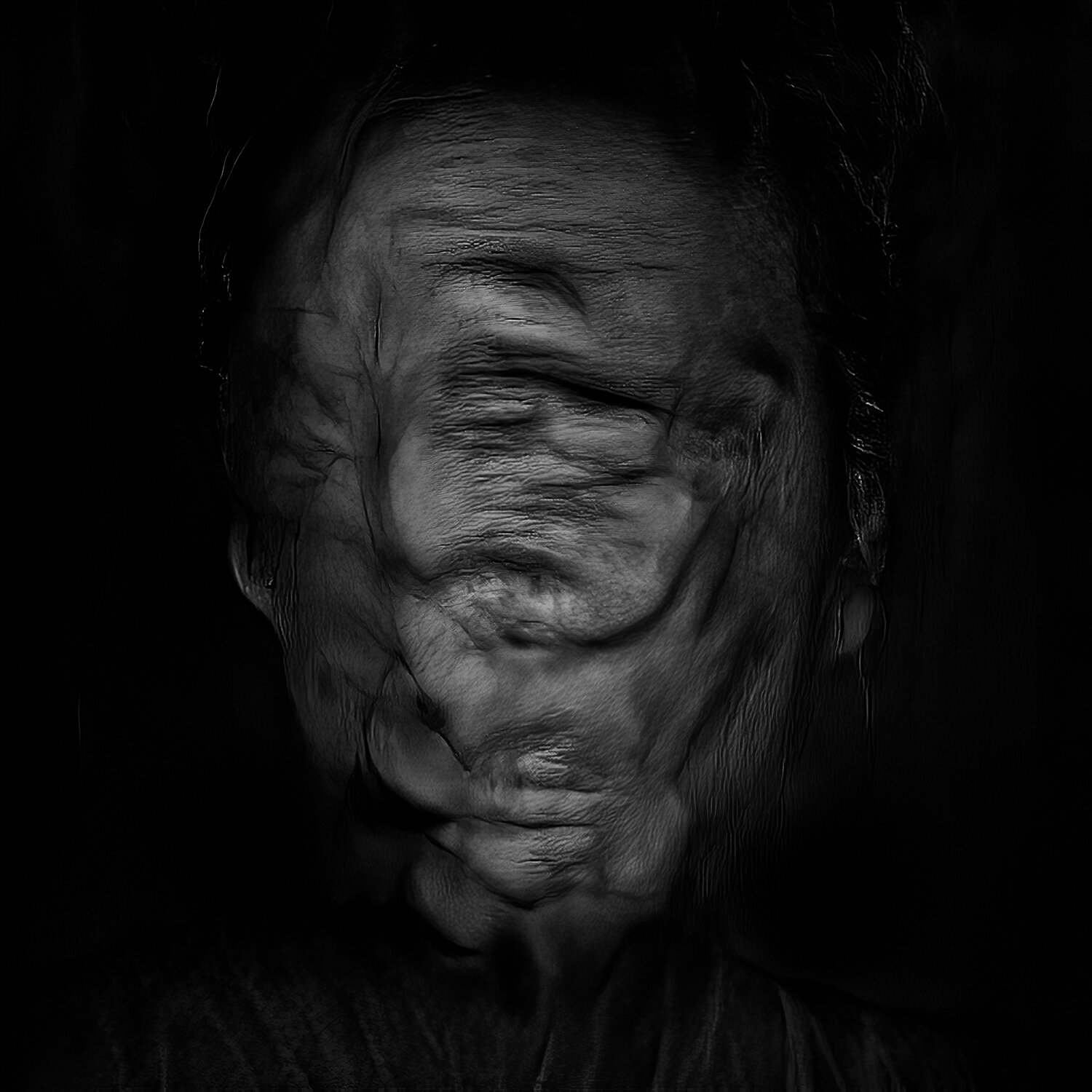

Human Trials

We live in a world of ubiquitous networked communication, and we generate a tremendous amount of data as many of our interactions, from shopping and entertainment to socializing and medical diagnosis, are digitized. Algorithms sift through this data to make sense of who we are, assigning to us a gender, ethnicity, age, sexual orientation, education level, class, marital status, parental status, reliability as an employee, citizenship, frequently visited locations, entertainment preferences, shopping preferences and, depending on who is doing the assigning, identification as a terrorist. This invisible algorithmic categorization shapes our lives, often without our knowledge. It can determine what we can easily see or buy, through personalization and recommendation engines, or it can drive decision engines that settle whether we are called for a job interview, whether we are fired for low productivity, or whether our mortgage application is approved. Often the underlying data and algorithms generate distorted views of their subjects, because both can be incomplete, inaccurate or biased. In this project I visualize this distortion through possible portraits of people who do not exist, created using artificial intelligence retrained on a set of my photographs produced in the studio using light painting.